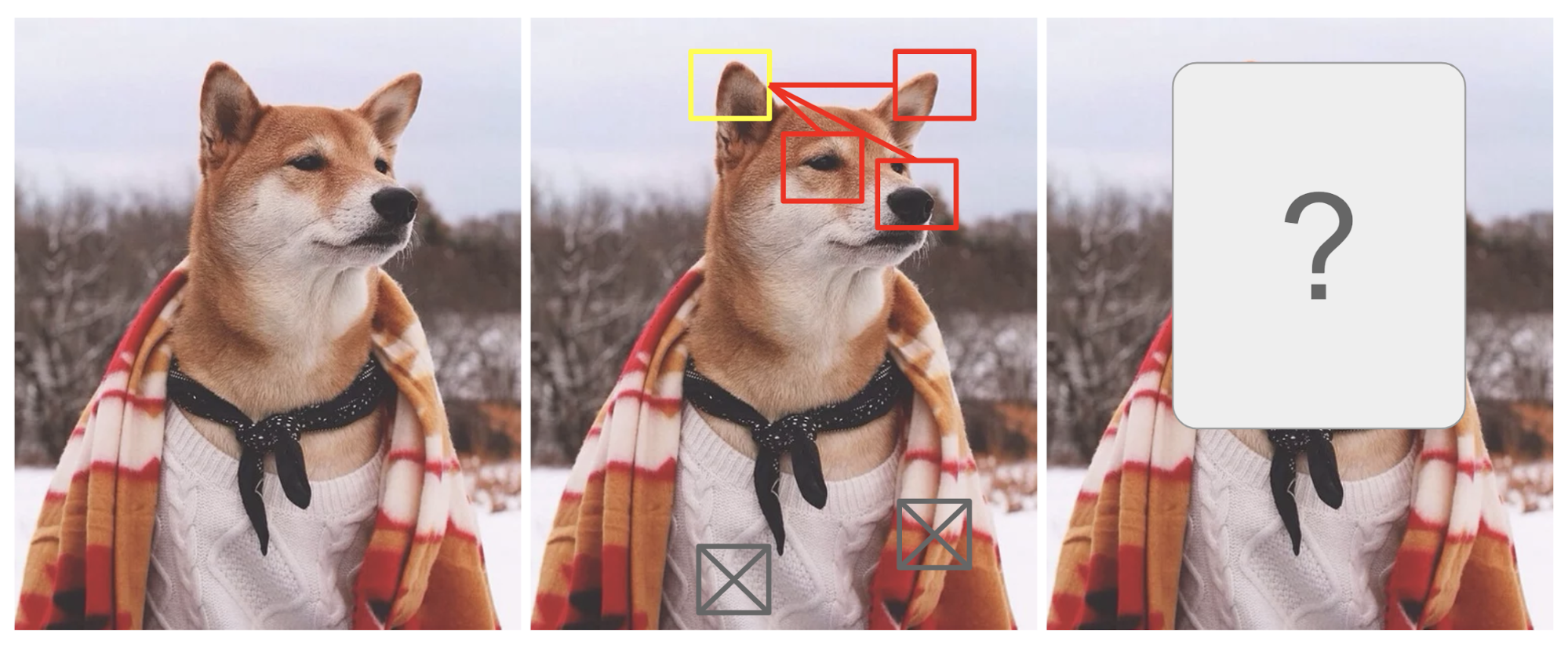

Microsoft AI Proposes 'FocalNets' Where Self-Attention is Completely Replaced by a Focal Modulation Module, Enabling To Build New Computer Vision Systems For high-Resolution Visual Inputs More Efficiently - MarkTechPost

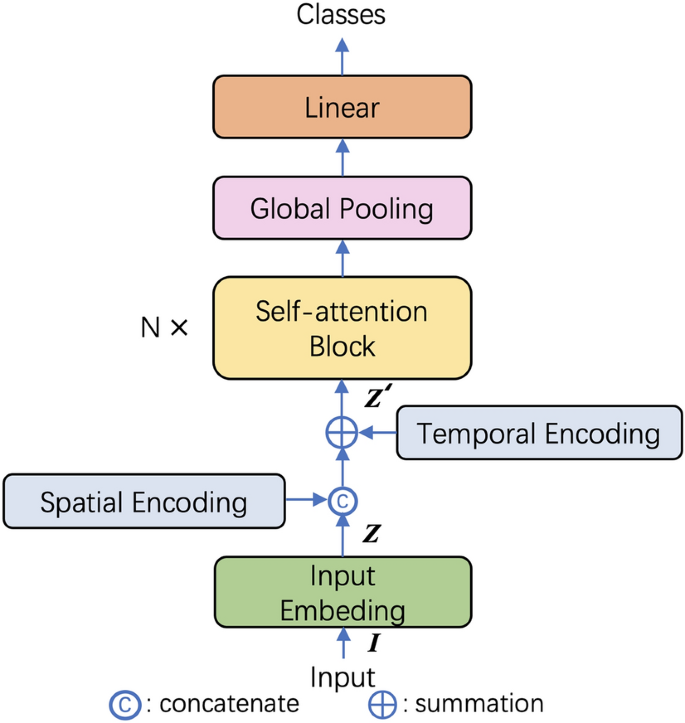

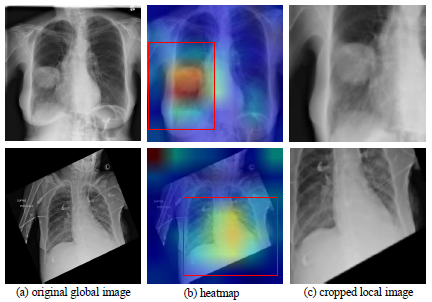

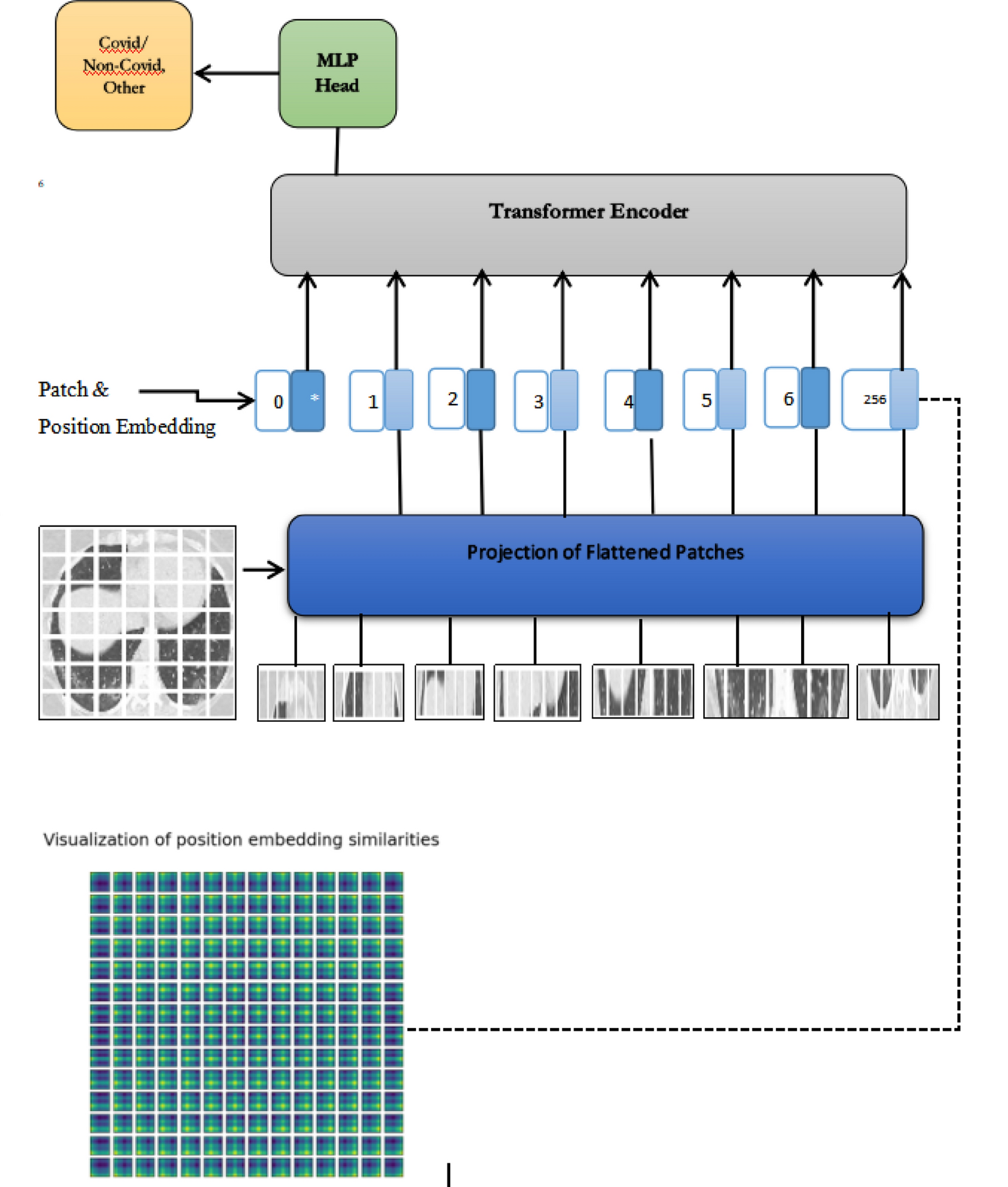

Spatial self-attention network with self-attention distillation for fine-grained image recognition - ScienceDirect

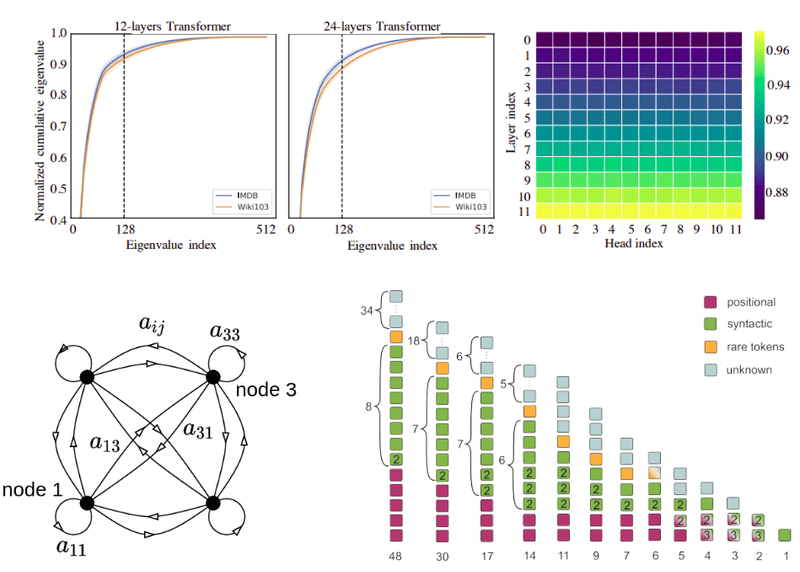

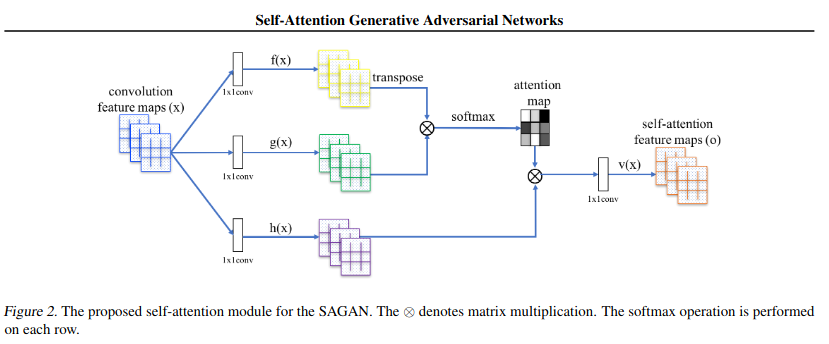

How Attention works in Deep Learning: understanding the attention mechanism in sequence models | AI Summer